Mysql碎片清理

为什么会产生碎片

简单的说,删除数据必然会在数据文件中造成不连续的空白空间,而当插入数据时,这些空白空间则会被利用起来.于是造成了数据的存储位置不连续,以及物理存储顺序与理论上的排序顺序不同,这种是数据碎片.实际上数据碎片分为两种,一种是单行数据碎片,另一种是多行数据碎片.前者的意思就是一行数据,被分成N个片段,存储在N个位置.后者的就是多行数据并未按照逻辑上的顺序排列.当有大量的删除和插入操作时,必然会产生很多未使用的空白空间,这些空间就是多出来的额外空间.索引也是文件数据,所以也会产生索引碎片,理由同上,大概就是顺序紊乱的问题.Engine 不同,OPTIMIZE 的操作也不一样的,MyISAM 因为索引和数据是分开的,所以 OPTIMIZE 可以整理数据文件,并重排索引。这样不但会浪费空间,并且查询速度也更慢。

https://www.cnblogs.com/xibuhaohao/p/10216078.html

碎片大小查看方法:

表逻辑空间大小(数据大小+索引大小):DATA_LENGTH+INDEX_LENGTH

表物理空间大小(frm+ibd):

碎片大小=逻辑大小-物理空间大小

清理方式

1.OPTIMIZE方式清理,需要停服务,清理时会锁表

2.使用pt-online-schema-change工具,可以不停服务清理

OPTIMIZE方式清理

查询碎片大小(不准确)

SELECT

TABLE_SCHEMA,

TABLE_NAME,

ENGINE,

concat( splinter, 'M' ) '碎片(M)'

FROM

(

SELECT

TABLE_SCHEMA,

TABLE_NAME,

ENGINE,

ROUND(( DATA_LENGTH + INDEX_LENGTH - TABLE_ROWS * AVG_ROW_LENGTH )/ 1024 / 1024 ) splinter

FROM

information_schema.`TABLES`

WHERE

table_schema NOT IN ( 'information_schema', 'mysql' )

) a

WHERE

splinter > 1

ORDER BY

splinter DESC清理sql

语法:

OPTIMIZE [LOCAL | NO_WRITE_TO_BINLOG] TABLE table_name1 , table_name2说明:

optimize table在操作的准备阶段和提交阶段期间短暂地进行独占表锁定,对于InnoDB表,OPTIMIZE TABLE映射到 ALTER TABLE ... FORCE,重建表以更新索引统计信息并释放聚簇索引中未使用的空间;

查询information_schema.FILES 的FILE_ID 和 information_schema.TABLES的CREATE_TIME 可知OPTIMIZE TABLE相当于表删除重建

optimize table的本质,就是alter table

mysql 5.5 的改表过程如下

1.创建一张新的临时表 tmp

2.把旧表锁住,禁止插入删除,只允许读写

3.把数据不断的从旧表,拷贝到新的临时表

4.等表拷贝完后,进行瞬间的rename操作

5.旧表删除掉

OPTIMIZE TABLE可以一次性对多个表进行碎片整理,只需要在OPTIMIZE TABLE后面接多个表名,并以英文逗号隔开即可

OPTIMIZE TABLE语句有两个可选的关键字:LOCAL和NO_WRITE_TO_BINLOG。在默认情况下,OPTIMIZE TABLE语句将会被记录到二进制日志中,如果我们指定了LOCAL或NO_WRITE_TO_BINLOG关键字,则不会记录。当然,一般情况下,我们也无需关注这两个关键字。

如果mysql是主从复制模式,不需要使用这两个关键字,master机器清理后slave机器会自动同步清理

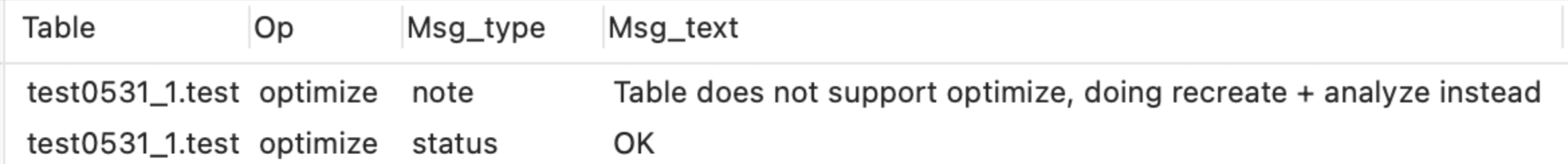

optimize table bitmap_enterprise.activity_pageuser,bitmap_enterprise.activity_pageuser_all;InnoDB引擎会返回如下语句,但是一样可以正常清理碎片

也可以直接使用下边的方式进行清理InnoDB引擎的表

ALTER TABLE tablename ENGINE=InnoDB注意事项

optimize table执行时会造成锁表,所以执行前一定要注意,不要有dml语句,保证业务暂停或切换

optimize table执行的时间会很长,表越大时间越长,执行前要考虑到时长的问题

pt-online-schema-change工具清理

安装:

安装前需要安装依赖

yum -y install perl-Digest-MD5 perl-TermReadKey perl-DBI perl-DBD-MySQL perl-Time- HiRes perl-IO-Socket-SSL gdb

安装命令

rpm -ivh percona-toolkit-3.3.1-1.el7.x86_64.rpm

使用方法:

pt-online-schema-change --user=${conn_user} --password=${conn_pwd} --host=${conn_host} P=3306,D=${conn_db},t=$table --charset=utf8 --alter="ENGINE=InnoDB" --nocheck-replication-filters --alter-foreign-keys-method=auto --execute > /home/hadoop/hcy/pt.log脚本(参数1为要清理的表名,脚本输出的表大小不准确)

#!/bin/bash

table=$1

conn_host='172.23.6.132'

conn_user='root'

conn_pwd='123456'

conn_db='databases_name'

startTime=`date +%s`

s_size=$(mysql -u$conn_user -p$conn_pwd -P3306 -h$conn_host information_schema -e "select concat(round(sum(DATA_LENGTH/1024/1024),2),'MB') as data from TABLES where table_schema=\"$conn_db\" and table_name=\"$table\"")

startSize=`echo $s_size|awk '{print $2}'`

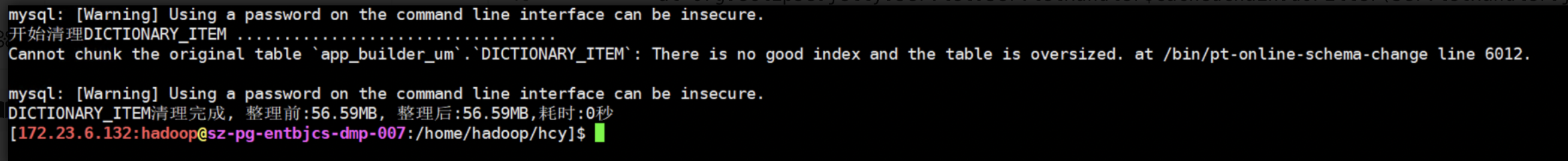

echo "开始清理$table .................................."

pt-online-schema-change --user=${conn_user} --password=${conn_pwd} --host=${conn_host} P=3306,D=${conn_db},t=$table --charset=utf8 --alter="ENGINE=InnoDB" --nocheck-replication-filters --alter-foreign-keys-method=auto --execute > /home/hadoop/hcy/pt.log

endTime=`date +%s`

e_size=$(mysql -u$conn_user -p$conn_pwd -P3306 -h$conn_host information_schema -e "select concat(round(sum(DATA_LENGTH/1024/1024),2),'MB') as data from TABLES where table_schema=\"$conn_db\" and table_name=\"$table\"")

endSize=`echo $e_size|awk '{print $2}'`

sumTime=$[ $endTime - $startTime ]该工具使用可能遇到的问题

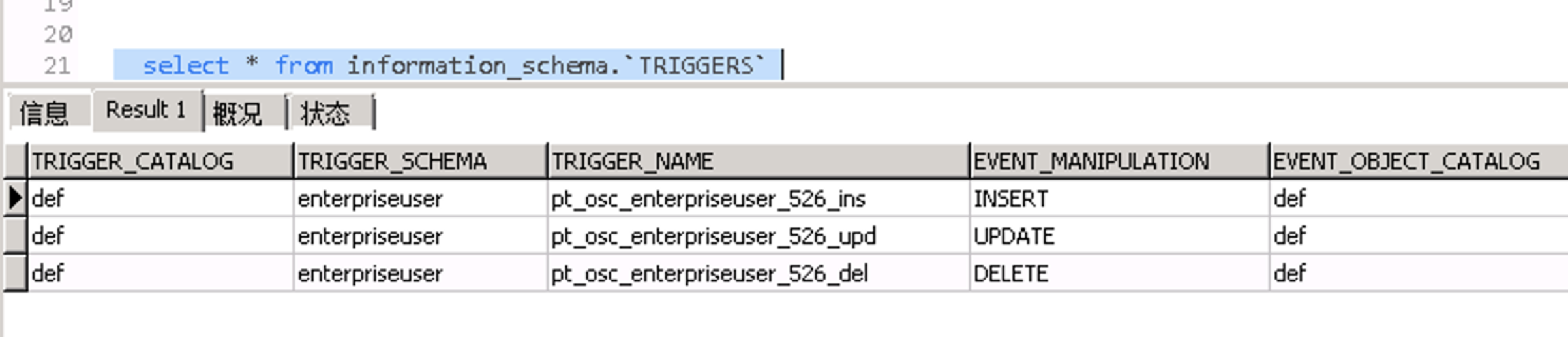

1.已存在触发器报错

清理前会对该表创建触发器,执行中意外中断时来不及删除已经创建的触发器,会导致再次运行时报错,如下

The table `enterpriseuser`.`526` has triggers but --preserve-triggers was not specified.

Please read the documentation for --preserve-triggers解决方法:

删除该工具创建的触发器

a. 查看所有触发器

select * from information_schema.`TRIGGERS`

b. 删除这三个触发器

DROP TRIGGER enterpriseuser.pt_osc_enterpriseuser_526_del

。。。

c. 重新运行该脚本

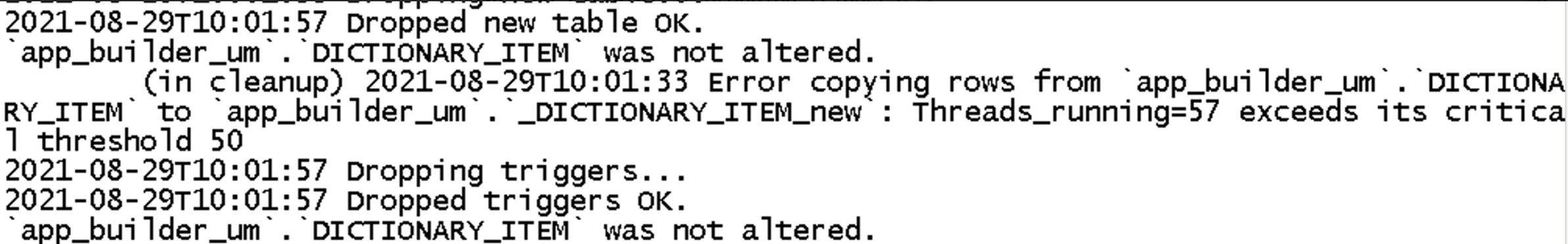

2.负载过高导致自动停止

报错信息

`app_builder_um`.`DICTIONARY_ITEM` was not altered.

(in cleanup) 2021-08-29T10:01:33 Error copying rows from `app_builder_um`.`DICTIONARY_ITEM` to `app_builder_um`.`_DICTIONARY_ITEM_new`: Threads_running=57 exceeds its critical threshold 50

2021-08-29T10:01:57 Dropping triggers...

2021-08-29T10:01:57 Dropped triggers OK.

`app_builder_um`.`DICTIONARY_ITEM` was not altered.加大这个阈值即可–critical-load Threads_running=400 加到400

--critical-load Threads_running=100

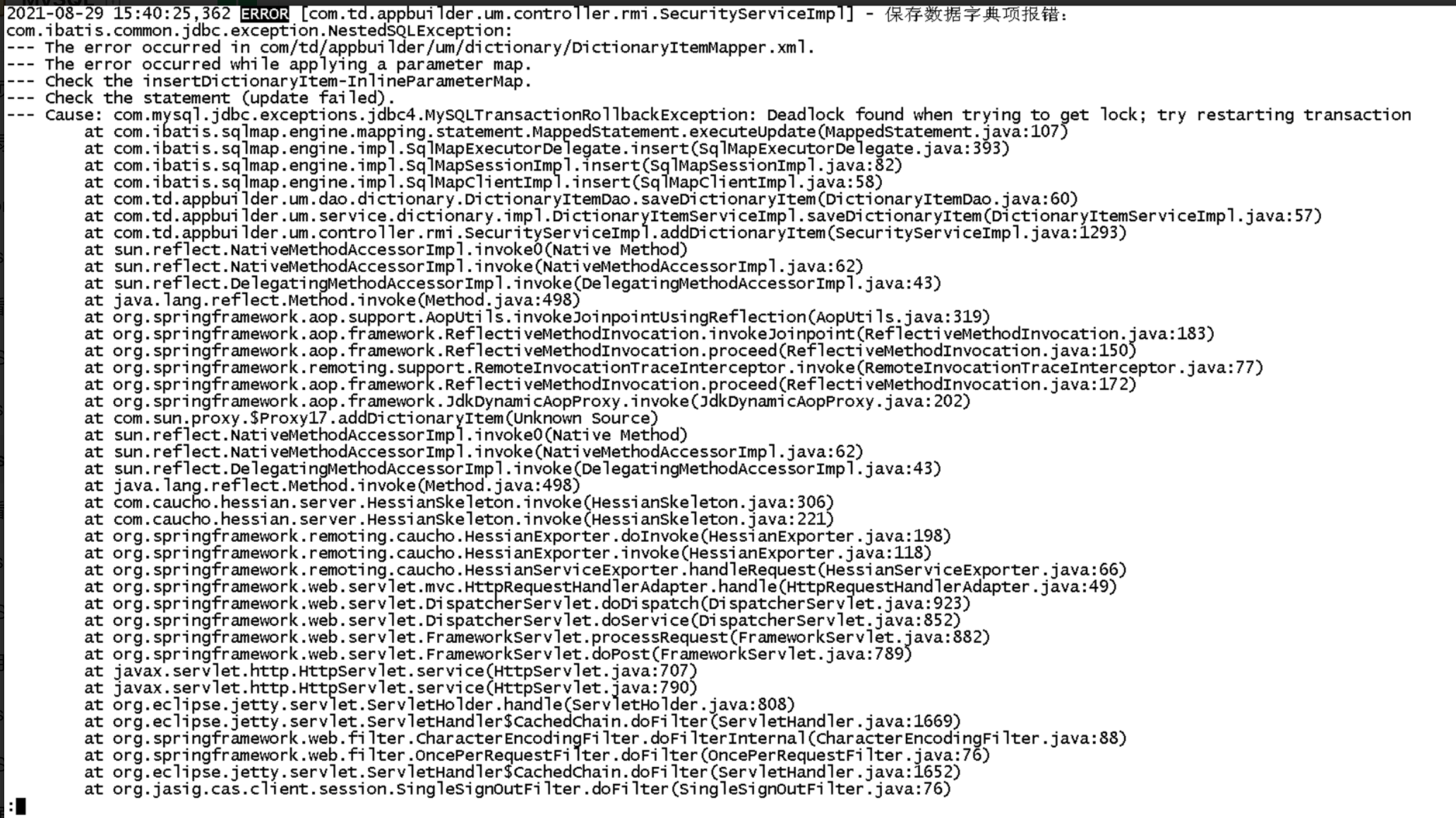

pt-online-schema-change --user=${conn_user} --password=${conn_pwd} --host=${conn_host} P=3306,D=${conn_db},t=$table --charset=utf8 --alter="ENGINE=InnoDB" --critical-load Threads_running=100 --nocheck-replication-filters --alter-foreign-keys-method=auto --execute > /home/hadoop/hcy/pt.log3.有可能造成死锁问题

2021-08-29 15:40:25,362 ERROR [com.td.appbuilder.um.controller.rmi.SecurityServiceImpl] - 保存数据字典项报错:

com.ibatis.common.jdbc.exception.NestedSQLException:

--- The error occurred in com/td/appbuilder/um/dictionary/DictionaryItemMapper.xml.

--- The error occurred while applying a parameter map.

--- Check the insertDictionaryItem-InlineParameterMap.

--- Check the statement (update failed).

--- Cause: com.mysql.jdbc.exceptions.jdbc4.MySQLTransactionRollbackException: Deadlock found when trying to get lock; try restarting transaction

at com.ibatis.sqlmap.engine.mapping.statement.MappedStatement.executeUpdate(MappedStatement.java:107)

at com.ibatis.sqlmap.engine.impl.SqlMapExecutorDelegate.insert(SqlMapExecutorDelegate.java:393)

at com.ibatis.sqlmap.engine.impl.SqlMapSessionImpl.insert(SqlMapSessionImpl.java:82)

at com.ibatis.sqlmap.engine.impl.SqlMapClientImpl.insert(SqlMapClientImpl.java:58)

at com.td.appbuilder.um.dao.dictionary.DictionaryItemDao.saveDictionaryItem(DictionaryItemDao.java:60)

at com.td.appbuilder.um.service.dictionary.impl.DictionaryItemServiceImpl.saveDictionaryItem(DictionaryItemServiceImpl.java:57)

at com.td.appbuilder.um.controller.rmi.SecurityServiceImpl.addDictionaryItem(SecurityServiceImpl.java:1293)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.springframework.aop.support.AopUtils.invokeJoinpointUsingReflection(AopUtils.java:319)

at org.springframework.aop.framework.ReflectiveMethodInvocation.invokeJoinpoint(ReflectiveMethodInvocation.java:183)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:150)

at org.springframework.remoting.support.RemoteInvocationTraceInterceptor.invoke(RemoteInvocationTraceInterceptor.java:77)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:172)

at org.springframework.aop.framework.JdkDynamicAopProxy.invoke(JdkDynamicAopProxy.java:202)

at com.sun.proxy.$Proxy17.addDictionaryItem(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at com.caucho.hessian.server.HessianSkeleton.invoke(HessianSkeleton.java:306)

at com.caucho.hessian.server.HessianSkeleton.invoke(HessianSkeleton.java:221)

at org.springframework.remoting.caucho.HessianExporter.doInvoke(HessianExporter.java:198)

at org.springframework.remoting.caucho.HessianExporter.invoke(HessianExporter.java:118)

at org.springframework.remoting.caucho.HessianServiceExporter.handleRequest(HessianServiceExporter.java:66)

at org.springframework.web.servlet.mvc.HttpRequestHandlerAdapter.handle(HttpRequestHandlerAdapter.java:49)

at org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:923)

at org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:852)

at org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:882)

at org.springframework.web.servlet.FrameworkServlet.doPost(FrameworkServlet.java:789)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:707)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:790)

at org.eclipse.jetty.servlet.ServletHolder.handle(ServletHolder.java:808)

at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1669)

at org.springframework.web.filter.CharacterEncodingFilter.doFilterInternal(CharacterEncodingFilter.java:88)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:76)

at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1652)

at org.jasig.cas.client.session.SingleSignOutFilter.doFilter(SingleSignOutFilter.java:76)执行过程有时候会造成死锁问题,暂时没找到解决办法

4.必须有主键索引或唯一索引才可以执行

There is no good index and the table is oversized